In an earlier chapter, What is Knowledge?, we distinguished between different uses of the word "knowledge": 'knowledge that', 'knowledge how', and 'knowledge by acquaintance'. It is quite obvious that expressing the first kind of knowledge requires language: what it is that someone knows can be expressed in a sentence. (That does not mean, of course, that one cannot give evidence of knowing that something is the case without using language: often there are other ways of showing that one knows something.)

We have also already discussed, in the chapter on Foundations of Knowledge, how language, far from being an unproblematic means of expressing thoughts and feelings, can bring with it certain dangers: thus, for example,

- hearing a certain description may actually affect the memory we have of a situation,

-

-

However, language is not only a means for expressing things, but can itself become the object of study, and while speaking is a kind of action, it is a very special kind of action.

1. How words have meaning

A very basic element of speech, common to all languages, is the name. We use names to talk about (classes of) objects ("dogs", "water") or particular individuals ("John"). At first, the relation between a name and the object named might appear unproblematic: names are almost randomly chosen, a dog is called "chien" in French and "inu" in Japanese, and most of us would probably agree that "That which we call a rose / By any other word would smell as sweet" (Shakespeare, Romeo and Juliet, II).

However, when we start to consider examples, it soon turns out that the situation is not always that simple.

Exercise 1.1.:

- Many names were originally descriptions. Simple examples are family names, many of which derive from a feature of the landscape -- "Tanaka" in Japanese is the same as the English "Middlefield" -- or from professions: "Müller" in German is an even more common surname than "Miller" is in English. Less obviously, most given names have meanings too, some more transparent than others: the Turkish name "Murad" means "wish", and "Richard" comes from Germanic roots meaning roughly "powerful" and "brave".

In your group, check who knows what their names mean, or where they come from.

-

For which of the two shapes shown on the right would you choose the name "malumo", and which one would you call "taketa"? Do all the members of the group agree?


Amongst adult speakers (at least of Western-European languages) there is apparently a great deal of conformity in the choice, but that general agreement only develops gradually, between the ages of 4 and 16. 4- and 5-year olds are almost as likely to choose one name as the other for each of these figures.

Peter R. Hofstätter, ed., Fischer Lexikon: Psychologie, 1957.

- In Shakespeare's play, Romeo and Juliet are lovers who cannot be united because they belong to feuding families, (i.e. families that are enemies.)

So when Juliet exclaims: "Romeo, Romeo, wherefore art thou Romeo? / Deny thy father and refuse thy name," is it simply Romeo's name that is the problem? "What's in a name?" as she asks. (KC)

- There is a geological feature in Australia, a large flat-topped hill well-known from photographs, which the Aboriginal people call "Uluru", but which the English-speaking settlers named "Ayers Rock". (KC)

- Think of other examples where a thing has more than one name, such as a person or a country.

- What name should be used for Uluru, or Ayers Rock, on a map? Should there be only one name? What is the purpose of a name?

- Does it matter which of the two names a speaker uses? Does it say anything about the speaker which name he uses?

[ sense and reference,

meaning is use: e.g. 'bad words' picked up by foreigners here ]

2. Chomsky for Beginners: how sentences have meaning

Having so far considered the vocabulary, or system of lexical items, of a language, we shall now approach its syntactic structure: a set of rules, the rules of grammar, for combining words into sentences. The sense of a grammatical sentence arises from the sense (or meaning) of the words, depending on the way they are put together, and by virtue of its sense the sentence can be true or false, (or in some cases it may serve to perform an action, like asking a question.)

Of course, people have been learning foreign languages throughout history, so the grammars of those languages have been studied for a long time, but a general interest in grammar is fairly recent. Linguistics is the study of language in general, even if the evidence always comes from particular languages, and Noam Chomsky is the person who more than any other has defined that field – so much so that the time before Chomsky is referred to as "BC": even linguists who disagree with Chomsky in many respects cannot help being Chomskyans (in the same way that even a psychoanalyst who considers himself 'anti-Freudian' cannot help being Freudian in fundamental respects).

Noam Chomsky, an American academic born in 1928 and based at MIT for most of his life, has had three careers:

- as a linguist, who revolutionised his field, in particular with his Syntactic Structures, 1957;

- as a philosopher, who was able to take a holistic view of man and society, in particular in his Problems of Knowledge and Freedom, 1971, based on two lectures; and

- as a 'guru of the Left': from an outspoken opponent of the US involvement in Vietnam, Chomsky has become the best-known 'alternative intellectual'.

A. Linguistics 'B.C.' (= "before Chomsky")

Starting in the early 19th century, the explorers who travelled all over the world not only brought back specimens of butterflies and reports of all kinds of wonders, they also 'collected' exotic cultures and strange languages.

And in the first half of the 20th century, as it became clear that many American Indian languages were about to disappear completely, there was a school of academics (Bloomfield) who did a great deal of research and tried to preserve the evidence for posterity.

The emphasis of this research was largely on speech and sounds, rather than grammar or meaning, i.e. on phonetics, rather than syntax or semantics. In fact the semantics was strongly behaviourist: the meaning of a sentence consists of the situations in which it can be heard. (This goes a lot further than saying that we can often tell the meaning of a sentence from the situation in which it is used.)

Starting in the 1940s and 1950s, a greater interest was taken in the grammars of these languages (Harris), the main technique available being that of parsing sentences: sentences were split into strings of words of different grammatical categories, the way they had been when pupils were taught Latin at school – and in fact the categories typically were those that had been introduced for Latin. An example:

"The boy has broken the toy." = T N1 v V T N2

(It should be clear what categories the letters stand for.) To avoid having to mention the meaning of words, the categories were established by patterns of co-occurrence: since "window" and "toy" can occur in similar places in sentences, they belong to the same grammatical category.

In terms of this parsing, grammatical rules could be formulated such as (omitting many details):

passivisation: T N1 v V T N2 → T N2 v be V-en by T N1
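As a rough illustration of how such a flat rule operates, the following Python sketch applies the passivisation formula to the example sentence. The function name `passivise`, the list-of-pairs representation and the restriction to the auxiliary "has" are assumptions made for this sketch, not part of the original parsing method.

```python
# Flat parsing rule from the text (many details omitted):
#   T N1 v V T N2  ->  T N2 v be V-en by T N1
PATTERN = ["T", "N", "v", "V", "T", "N"]

def passivise(tagged):
    """Apply the rule to a list of (word, category) pairs (hypothetical helper)."""
    categories = [category for _, category in tagged]
    if categories != PATTERN:
        raise ValueError("sentence does not fit the formula T N1 v V T N2")
    t1, n1, aux, verb, t2, n2 = [word for word, _ in tagged]
    if aux != "has":
        # Assumption: only "has" is handled, so that "be" surfaces as "been"
        # and the main verb is already in its -en form.
        raise ValueError("this sketch only handles the auxiliary 'has'")
    return [t2.capitalize(), n2, aux, "been", verb, "by", t1.lower(), n1]

sentence = [("The", "T"), ("boy", "N"), ("has", "v"),
            ("broken", "V"), ("the", "T"), ("toy", "N")]
print(" ".join(passivise(sentence)) + ".")  # The toy has been broken by the boy.
```

Any sentence with a different shape is rejected by this sketch, which hints at why a rule stated over flat strings of categories has to be rewritten for each new construction.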

Exercise 2.1.

- Give three examples which fit the sentence formula T N v V.

- Using the same four categories, construct another sentence formula, and give three examples of sentences that fit that formula.

- Now, using Q for a question word as well, construct another sentence formula. Convert your three examples in b. into sentences which fit this formula.

However,

- there are infinitely many possible grammatical sentence structures, and we cannot list them all, let alone have learnt them;

- parsing is too ad hoc, it becomes too complicated as it has to be made up for each case – there simply is not enough of a theory;

- It may be easy to make a rule for passivisation for a simple sentence like: "Joe sees Paul."

But that rule has to be amended for: "Joe sees Paul and Thomas."

And again for: "Joe sees Paul and Thomas was there."

- it used to be assumed that the grammatical categories and rules of Western languages, Latin in particular, could be applied to all languages, but in fact different languages have different categories and require different rules;

- parsing is too simple:

- "Sartre expressed his views on television."

this sentence has a unique parsing, but it is ambiguous (= it has two meanings,) and the ambiguity is grammatical – making a grammatical change eliminates the ambiguity:

"His views were expressed by Sartre on television."

"His views on television were expressed by Sartre."

- "Frank is easy/ eager to please."

The two sentences have the same superficial structure, but quite different meanings: who does the pleasing in each case? Again, the difference can be shown to be grammatical, i.e. there are different grammatical constructions between which parsing fails to distinguish:

"It is easy/ *eager to please Frank."

"Frank is *easy/ eager to please someone."

(An asterisk * before a sentence, or a part of a sentence, indicates that the sentence or the version with that part is not grammatical.)

Exercise 2.2.:

The two sentences in each pair have the same superficial structure. Try to think of grammatical changes that suit one sentence in the pair but not the other.

- "Morten and Ole are Scandinavians." / "Morten and Ole are friends."

- "Miriam and Semira are similar." / "Miriam and Semira are happy."

B. Chomskyan linguistics

Chomsky viewed grammar as the theory of a language, much as in physics we have theories to explain observations. In grammar, what is explained are particular judgments of native speakers:

- grammaticality (= whether a sentence is well-formed or ill-formed,)

- synonymity (= two sentences having the same meaning,)

"A boy is outside." and "There is a boy outside."

- ambiguity (= a sentence having two meanings):

"Murdering peasants can be dangerous."

A good theory of grammar, just like a good theory in physics, should enable us to make predictions for a wide range of observations from a small number of principles.

But who are the native speakers whose judgments are to be accepted as evidence? Chomsky and his school took the (at the time) radical step of accepting that any dialect has its own grammar. It turns out that the grammars of non-standard versions of a language are as regular as, and no simpler than, the grammar of 'the standard language'. The distinction between a 'high language' – such as the Queen's English or Hochdeutsch – and dialects is a social distinction, often used for political ends, to the disadvantage of certain social groups; it ain't a linguistic distinction.

Grammar is therefore no longer normative, but descriptive: whether a sentence is grammatically correct may depend on the group of people whose language is being studied.

- Of the following three sentences, different groups in the US have different opinions:

"He told me where the room was." – all Americans consider it correct

"He asked me where the room was." – only white Americans do

"He asked me where was the room." – only black Americans do

- "All of the students weren't present."

– for different groups this sentence is ambiguous, has only one meaning, or only the other.

Exercise 2.3.:

Of each of the following six sentences it has been claimed that it is ambiguous. Write down the possible meanings. Amongst the examples, can you distinguish two kinds of ambiguity?

- "This pig is ready to eat."

- "Don't forget how low Leonard is."

- "Benjamin told Michael that it would be difficult to shave himself."

- "They fed her dog biscuits."

- "I know a taller man than Doug."

- "At last Algernon has arrived."

Chomsky's main insights:

-

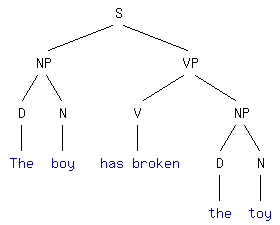

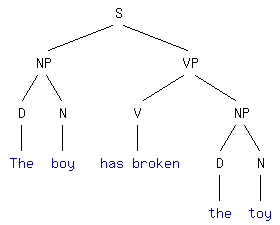

Human speech is not interpreted linearly, as had been assumed (and as computer programs are, line by line, instruction by instruction,) but in terms of 'phrase markers' or 'tree structures', like the one shown on the right for a very simple sentence.


(nodes: S = sentence, NP = noun phrase, VP = verb phrase, D = determiner, N = noun, V = verb)

Phrase markers are theoretical entities, in the same sense as electrons are: we may never be able to observe them directly, but they constitute essential ingredients in some theory – of grammar, or of atomic structure – and any evidence for that theory confirms our acceptance of these entities.

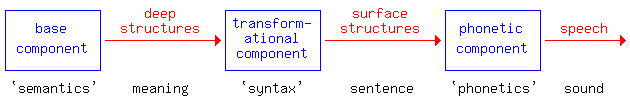

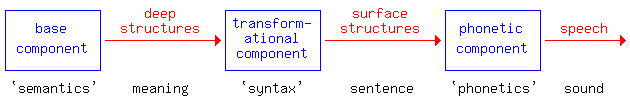

- The phrase markers of the spoken language are the product of transformations that are applied to simpler structures, which more closely represent the meanings of sentences:

'deep structures' —'transformations'→ 'surface structures'

Non-lexical (that is, syntactic or grammatical) ambiguities can then arise when sentences with different deep structures happen to give rise to the same surface structure. And some observations require the distinction between surface and deep structure for their explanation.

Consider the following contractions:

"Life is/ Life's worth living." are both grammatical, but

"Norway is less overcrowded than Japan is/ *Japan's."

"Complicated though it is/ *it's, the theory makes sense."

– the contraction is not allowed if what follows "is" in the deep structure has been (re-)moved.

C. Transformational Grammar

Chomsky therefore proposed a model something like the following:

(We will not be considering the phonetic component at all, which has been found to function not unlike the transformational component; nor the role of writing, though that may be a good topic for discussion. The account here will be greatly simplified, omitting many elements of the theory, such as tense – past, future, etc. – to show the overall shape.)

- base component:

The base component generates deep structures, i.e. phrase markers which are semantic representations, representing the meanings in a form perhaps close to our thinking. It is not a part of the brain, but one layer of a model, and it consists of simple rewrite rules:

The simple phrase marker above, for "The boy has broken the toy.", can be seen to be the result of using the following rules (– items in brackets are optional):

S → NP + VP, NP → (D +) N, VP → V (+ NP)

and lexical insertions:

D → "the", "a", "some", ...

N → "boy", "toy", "Frank", "someone", ...

and so on.
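The rewrite rules and lexical insertions above can be written down as data, and a phrase marker can then be checked against them. The following Python sketch is one way to do this; the nested-tuple representation, the function names `licensed` and `words`, and the treatment of "has broken" as a single V are assumptions made for illustration.

```python
# Rewrite rules from the text; optional items are spelled out as alternatives.
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["D", "N"], ["N"]],
    "VP": [["V", "NP"], ["V"]],
}
# Lexical insertions; treating "has broken" as one V is an assumption.
LEXICON = {
    "D": {"the", "a", "some"},
    "N": {"boy", "toy", "Frank", "someone"},
    "V": {"has broken"},
}

def licensed(tree):
    """Return True if every node of the phrase marker follows some rule."""
    label, children = tree[0], list(tree[1:])
    if label in LEXICON:
        return len(children) == 1 and children[0] in LEXICON[label]
    if label not in RULES:
        return False
    child_labels = [child[0] for child in children]
    return child_labels in RULES[label] and all(licensed(c) for c in children)

def words(tree):
    """Read the words off the leaves of a phrase marker, left to right."""
    label, children = tree[0], tree[1:]
    if label in LEXICON:
        return [children[0]]
    return [word for child in children for word in words(child)]

# Phrase marker for "The boy has broken the toy."
tree = ("S",
        ("NP", ("D", "the"), ("N", "boy")),
        ("VP", ("V", "has broken"),
               ("NP", ("D", "the"), ("N", "toy"))))

print(licensed(tree))          # True
print(" ".join(words(tree)))   # the boy has broken the toy
```

A marker such as `("S", ("VP", ("V", "has broken")))` is rejected, because no rule rewrites S as VP alone.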

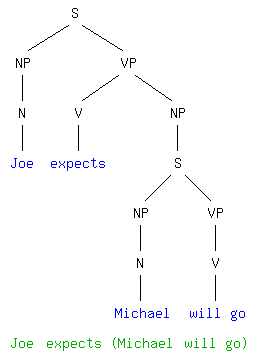

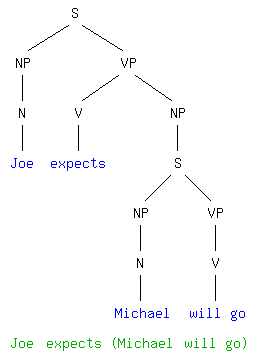

Up to this point, all the phrase markers would be very simple structures. What gives the theory its power is one special rule:


NP → S

– so we can have one sentence embedded in another, as in the example on the right. That phrase marker can be represented more simply as

"Joe expects (Michael will go),"

with the embedded sentence in brackets. This recursiveness gives rise to all the complicated sentences that we are all able to produce and understand, such as:

"The soldiers the President honoured had killed innocent peasants."

although the level of embedding that we can deal with seems to be limited:

"The soldiers the President the demonstrators hated honoured had killed innocent peasants."
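The effect of the recursive rule NP → S can be made concrete with a small Python sketch that prints a phrase marker in the bracket notation used above and counts how deeply sentences are nested. The nested-tuple representation and the function names `bracket` and `embedding_depth` are assumptions made for this illustration, and "will go" is treated as a single V.

```python
def bracket(tree, top=True):
    """Render a phrase marker as words, with embedded sentences in brackets."""
    label, children = tree[0], tree[1:]
    if len(children) == 1 and isinstance(children[0], str):
        return children[0]                      # a lexical leaf such as ("N", "Joe")
    text = " ".join(bracket(child, top=False) for child in children)
    return f"({text})" if label == "S" and not top else text

def embedding_depth(tree):
    """Count the largest number of S nodes on any path from root to leaf."""
    label, children = tree[0], tree[1:]
    inner = max((embedding_depth(c) for c in children if isinstance(c, tuple)),
                default=0)
    return inner + (1 if label == "S" else 0)

# "Joe expects (Michael will go)": the object NP is rewritten as S.
tree = ("S",
        ("NP", ("N", "Joe")),
        ("VP", ("V", "expects"),
               ("NP", ("S",
                       ("NP", ("N", "Michael")),
                       ("VP", ("V", "will go"))))))

print(bracket(tree))           # Joe expects (Michael will go)
print(embedding_depth(tree))   # 2
```

Because the same rule can be applied again inside the embedded sentence, nothing in the rules themselves limits the depth; any limit on what speakers can handle has to come from elsewhere.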

Exercise 2.4.:

Using the four re-write rules given above, try to construct 'deep structures' for the following sentences:

- "Frank is easy to please."

- "Frank is eager to please."

(you will need the lexical insertions

N → "Frank", "someone" (= x),

V → "is eager", "is easy".)

- transformational component:

This consists of rules for re-arranging phrase markers, converting deep structures into the sentences as we speak them. A sentence is grammatical if there is a deep structure from which it can be derived by these transformational rules.

Some examples:

-

raising:

"Joe expects (Michael will go)" → "Joe expects Michael to go."

(We can see that "Michael" has been moved to the higher sentence, because we can now put it in the passive: ... → "Michael is expected by Joe to go.")

-

equi-NP-deletion:

"Reggie intends (Reggie will come)" → "Reggie intends to come."

-

There-insertion:

"A boy is outside" → "There is a boy outside."

-

passivisation

Most of these transformations apply in a certain order, from the lowest level upwards, in a kind of cycle, followed by some post-cyclic transformations.

The power of the theory can be seen from the wide range of grammatically correct structures that can be explained using just a small number of transformations:

"Anthony feared (Bill had seen Charles)"

→ "Anthony feared (Charles had been seen by Bill)" – passivisation

→ "Anthony feared Charles (to have been seen by Bill)" – raising

→ "Charles was feared by Anthony to have been seen by Bill." – passivisation
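The derivation above can be replayed mechanically. The following Python sketch is a greatly simplified model under stated assumptions: the `Clause` class, the function names, and the two small morphology tables (which cover only the verbs in this one example) are all invented for illustration and are not part of the theory itself.

```python
from dataclasses import dataclass, replace
from typing import Optional, Union

# Toy morphology tables: assumptions covering only the verbs of this example.
PASSIVE = {"had seen": "had been seen", "feared": "was feared"}
INFINITIVE = {"had been seen": "to have been seen"}

@dataclass(frozen=True)
class Clause:
    subject: Optional[str]
    verb: str
    obj: Union[str, "Clause", None] = None  # object NP or embedded sentence
    raised: Optional[str] = None            # NP raised out of the embedded sentence
    agent: Optional[str] = None             # the "by ..." phrase of a passive

def passivise(c):
    """Promote the object NP (or a raised NP); demote the subject to a by-phrase."""
    if c.raised is not None:
        return replace(c, subject=c.raised, raised=None,
                       agent=c.subject, verb=PASSIVE[c.verb])
    if not isinstance(c.obj, str):
        raise ValueError("no noun phrase available to passivise")
    return replace(c, subject=c.obj, obj=None,
                   agent=c.subject, verb=PASSIVE[c.verb])

def raise_subject(c):
    """Move the subject of the embedded sentence up into the higher sentence."""
    inner = c.obj
    if not isinstance(inner, Clause) or inner.subject is None:
        raise ValueError("no embedded subject to raise")
    lowered = replace(inner, subject=None, verb=INFINITIVE[inner.verb])
    return replace(c, raised=inner.subject, obj=lowered)

def on_embedded(c, transformation):
    """Apply a transformation one level down, to the embedded sentence."""
    return replace(c, obj=transformation(c.obj))

def render(c, brackets=True):
    parts = [c.subject, c.verb, c.raised, f"by {c.agent}" if c.agent else None]
    text = " ".join(p for p in parts if p)
    if isinstance(c.obj, Clause):
        inner = render(c.obj, brackets)
        text += f" ({inner})" if brackets else f" {inner}"
    elif c.obj:
        text += f" {c.obj}"
    return text

deep = Clause("Anthony", "feared", Clause("Bill", "had seen", "Charles"))
step1 = on_embedded(deep, passivise)   # passivisation in the lower sentence
step2 = raise_subject(step1)           # raising
step3 = passivise(step2)               # passivisation in the higher sentence

for step in (deep, step1, step2):
    print(render(step))
print(render(step3, brackets=False) + ".")
```

The three transformations are applied from the most deeply embedded sentence upwards, mirroring the order of the derivation in the text.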

Each transformation comes with conditions and constraints, determining when it can be applied, and some transformations are optional, others obligatory:

-

optional:

"Joe expects (Michael will go)" → "Joe expects that Michael will go."

or: ...→ "Joe expects Michael to go." – raising

-

obligatory:

The following two sentences are synonymous (= have the same meaning):

"Canadians speak English." → "English is spoken by Canadians."

– so passivisation is optional;

but the following two sentences are not synonymous

"Nobody in this room speaks two languages." /

"Two languages are spoken by nobody in this room."

– so passivisation may be required for the intended meaning.

The following two sentences are synonymous:

"Nixon loves Nixon." → "Nixon loves himself."

– so reflexivisation is optional;

but the following two sentences are not synonymous:

"Only Nixon loves Nixon." / "Only Nixon loves himself."

– so reflexivisation may be required for the intended meaning.

Sometimes one can have direct evidence of the working of the transformational component:

- Children's language acquisition is apparently in the order of transformations, and similarly language deteriorates in the order of transformations.

- The reason that Dutch, apparently, sounds child-like to an Afrikaans-speaker, and vice versa, is that there are some slight differences in the transformations: so each does not hear certain transformations in the other's speech that they would expect from a fully competent speaker of their own language.

Exercise 2.5.:

- Sometimes it is possible to perform something like experiments in linguistics: the phrase "at all", for instance, is restricted in its distribution. By considering examples, find a general description of the environments in which it can occur.

- Apply the transformations specified to the deep structures given, (again in a greatly simplified form,) starting at the lowest level, with the most deeply embedded sentence.

- "Barry convinced Nancy (Nancy will run)"

– equi-NP-deletion > passivisation

- "Martha suspected (a boy is outside)"

– There-insertion > raising (> passivisation)

- "Amy thought (Beth believed (Carol irritated Daisy))"

– passivisation > raising > passivisation > raising > passivisation

- Try to think of another sentence, like the one above, which does not have the same meaning when it is turned into the passive.

D. Zooming out: How the Mind Works

Having considered, very roughly, how transformational grammar explains how we construct – and presumably how we understand – sentences, we can now 'zoom out' and view the human linguistic capability in larger contexts.

-

Transformational grammar allows us to formulate linguistic universals, i.e. aspects that are common to all human languages. These had always been sought for in earlier theories.

-

It appears that the shape of the theory – with deep structures generated by simple re-write rules, and surface structures derived from these deep structures by transformations that apply in a cycle from the lowest level up – is (or can be) the same for all languages.

The precise form of the deep structure is under-determined in any language: the transformations can be formulated to fit different forms. But considering a variety of languages, one can choose the form that allows for the simplest transformations, and that on present evidence seems to be a verb-first form: V S O.

-

There seem to be certain constraints on transformations that apply across all languages, the main one of these being the Conjoint Structure Constraint (CSC). (A 'conjoint structure' is one produced by a rule like Y → X and X.)

"Sam kissed Sue." → "Sue was kissed by Sam." – but not:

"Sam kissed Sue and her sister."

→ "* Sue was kissed and her sister by Sam."

It is a consequence of the CSC that there cannot be a word in any language that means "blue and".

-

If all human languages have essentially the same structure, this would support Chomsky's innateness hypothesis, according to which human beings are born with the ability to learn a language: what is innate is not the grammar of any particular language, of course, but the general shape of the theory, as described above. This view is called 'rationalism'; the opposite view, that the mind is a tabula rasa (= blank slate) at birth and all knowledge is derived solely from experience, is called 'empiricism'.

There are other arguments for the rationalist position, apart from the universality of the theory of language:

-

Children learn a language remarkably quickly, much faster than they would by rote or by conditioning at a stage when they are not capable of organised learning.

-

The operations required to make and understand sentences are very abstract – even adults have difficulties explaining them; and how could children even begin to structure the sequence of sounds that they hear? One need only compare the way children so easily acquire a language with the way in which they have to learn arithmetic to see that different kinds of mechanisms must be involved.

-

Children don't learn language by heart, but can learn from individual instances – a bit like hypothesis testing in science; this suggests that they fit observations into a pre-existing pattern.

-

The evidence available to the child is typically extremely disorganised, often even misleading, as the parents make mistakes or are themselves not native speakers.

-

This innate theory of language may then be a part of a specifically human symbolic capacity. What evidence is there for this?

-

The ability to learn a language seems remarkably uniform, with only minor differences in degree between individuals: it correlates with, but is not dependent on, other aspects of mental functioning.

-

The different languages of the world function similarly, and are of comparable degree of grammatical complexity – there is no 'half-language' spoken anywhere.

For instance, even though different languages use very different sounds, the number of distinctions required between all the sounds in one language, like that between 'voiced' consonants ("b, d, g, z") and 'unvoiced' consonants ("p, t, k, s") in English and Japanese, is about the same.

-

All human societies that have ever been found seem to have had not only a language, but also certain other kinds of symbolic systems: something like art, or music, or rituals. That symbolic capacity is presumably indivisible: it comes as one 'lump'.

(A strong recommendation: The route from an investigation of language and its grammar to the functioning and capabilities of the human mind, and what the insights gained mean for our moral choices in society, has been taken in a series of remarkably clear and remarkably entertaining books by Steven Pinker, a linguist at MIT: The Language Instinct, 1994, How the Mind Works, 1997, and The Blank Slate, 2002.)

3.

[ structuralism ]

4.

Teaching Notes:

Exercise 1.1.:

- The same person may, by different people or by the same person at different times, be called "Mr. Smith", "John" or "Johnny". What each of these implies about the relationship of that person to the speaker is part of the connotations of the names.

Similarly it has political connotations whether one calls a certain country "Burma" or by its now official name "Myanmar".

This chapter is still incomplete, but slowly growing.
